Model evaluation

AI engineers often need to evaluate models with different parameters or prompts, compare the model output to ground truth, and compute evaluator values from those comparisons. AI Toolkit lets you run such evaluations with minimal effort by uploading a prompts dataset.
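To illustrate what an evaluator value can be, the following Python sketch computes a token-overlap F1 score between a response and its ground truth. It is not AI Toolkit's implementation of any built-in evaluator. The function name `f1_score`, the whitespace tokenization, and the `ground_truth` key are assumptions made for this example.

```python
from collections import Counter


def f1_score(response: str, ground_truth: str) -> float:
    """Token-overlap F1 between a model response and the ground truth.

    Illustrative only: lowercases and splits on whitespace, with no
    punctuation stripping or other normalization.
    """
    response_tokens = response.lower().split()
    truth_tokens = ground_truth.lower().split()
    if not response_tokens or not truth_tokens:
        return 0.0
    # Multiset intersection: counts tokens shared by both, respecting repeats.
    common = Counter(response_tokens) & Counter(truth_tokens)
    overlap = sum(common.values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(response_tokens)
    recall = overlap / len(truth_tokens)
    return 2 * precision * recall / (precision + recall)


# Hypothetical dataset row; the "ground_truth" key spelling is an assumption.
row = {
    "query": "What is the capital of France?",
    "response": "The capital is Paris",
    "ground_truth": "Paris",
}
print(round(f1_score(row["response"], row["ground_truth"]), 2))  # prints 0.4
```

A judge-model evaluator would replace this fixed formula with a prompt to the judging model, but the shape is the same: one score per dataset row.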

Start an evaluation job

-

In AI Toolkit view, select TOOLS > Evaluation to open the Evaluation view

-

Select Create Evaluation, and then provide the following information:

-

Evaluation job name: default or a name you can specify

-

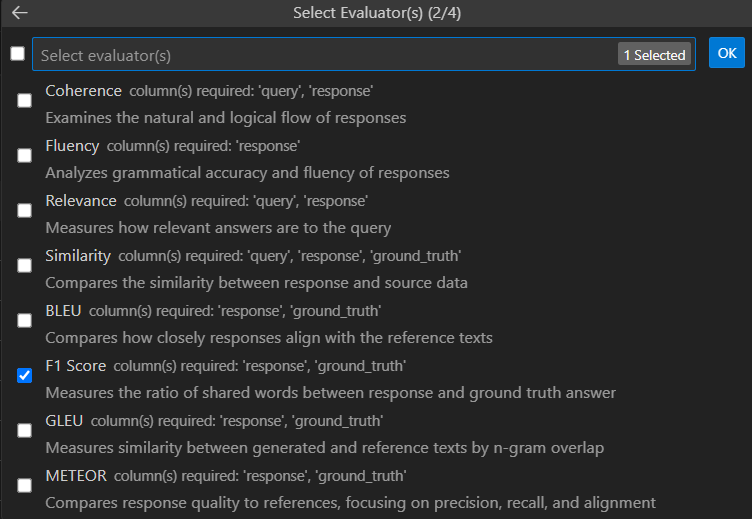

Evaluator: currently, only the built-in evaluators can be selected.

-

Judging model: a model from the list that can be selected as judging model to evaluate for some evaluators.

-

Dataset: select a sample dataset for learning purpose, or import a JSONL file with fields

query,response,ground truth.

-

-

A new evaluation job is created and you will be prompted to open your new evaluation job details

-

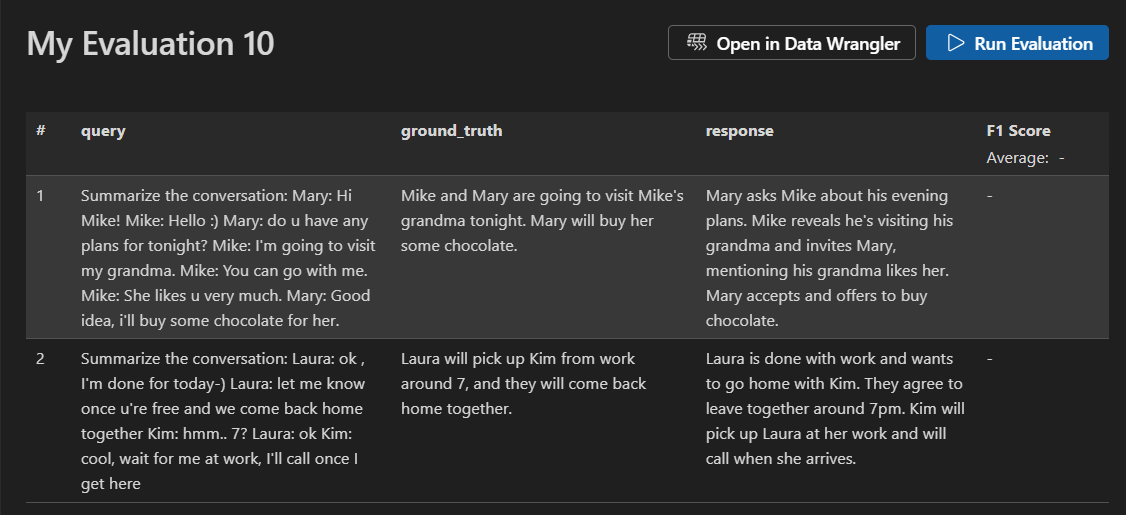

Verify your dataset and select Run Evaluation to start the evaluation.

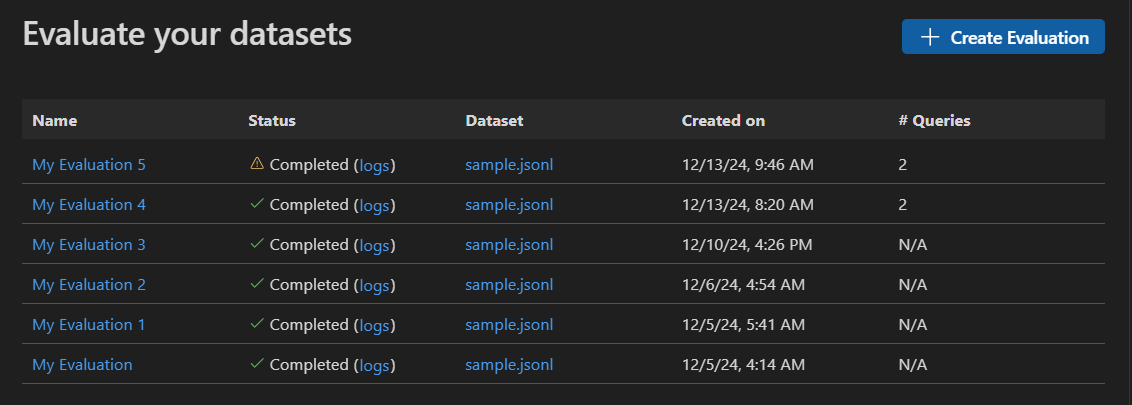

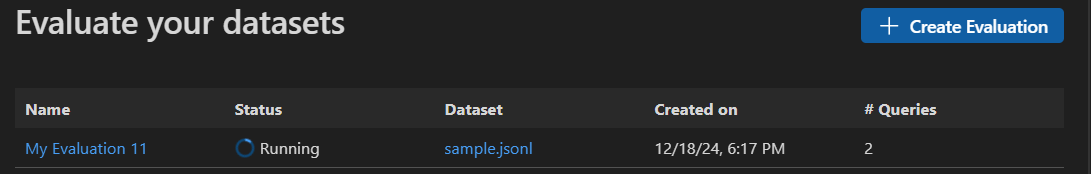
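Before importing your own dataset, you may want to check that every line is a JSON object with the expected fields. The following Python sketch writes and validates a small JSONL file. The key spelling `ground_truth`, the file name, and the helper names `write_dataset` and `validate_dataset` are assumptions, so compare the keys against one of the sample datasets first.

```python
import json
from pathlib import Path

# Assumed key names: the text lists query, response, and ground truth;
# the exact spelling of the last key ("ground_truth" here) is an assumption.
REQUIRED_FIELDS = ("query", "response", "ground_truth")


def write_dataset(path: Path, rows: list[dict]) -> None:
    """Write one JSON object per line (JSONL)."""
    with path.open("w", encoding="utf-8") as f:
        for row in rows:
            f.write(json.dumps(row, ensure_ascii=False) + "\n")


def validate_dataset(path: Path) -> list[str]:
    """Return a list of problems; an empty list means every line looks valid."""
    problems = []
    with path.open(encoding="utf-8") as f:
        for lineno, line in enumerate(f, start=1):
            if not line.strip():
                continue  # skip blank lines
            try:
                record = json.loads(line)
            except json.JSONDecodeError as exc:
                problems.append(f"line {lineno}: invalid JSON ({exc.msg})")
                continue
            if not isinstance(record, dict):
                problems.append(f"line {lineno}: expected a JSON object")
                continue
            missing = [k for k in REQUIRED_FIELDS if k not in record]
            if missing:
                problems.append(f"line {lineno}: missing {', '.join(missing)}")
    return problems


if __name__ == "__main__":
    path = Path("eval_dataset.jsonl")  # hypothetical file name
    write_dataset(path, [
        {"query": "What is 2 + 2?", "response": "4", "ground_truth": "4"},
        {"query": "Capital of Japan?", "response": "Tokyo"},  # no ground_truth
    ])
    for problem in validate_dataset(path):
        print(problem)
```

Running the script reports the second row as missing its ground-truth field, which is the kind of problem worth catching before you start an evaluation job.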

Monitor the evaluation job

Once an evaluation job starts, you can check its status in the evaluation job view.

Each evaluation job shows a link to the dataset that was used, the logs from the evaluation process, a timestamp, and a link to the evaluation details.

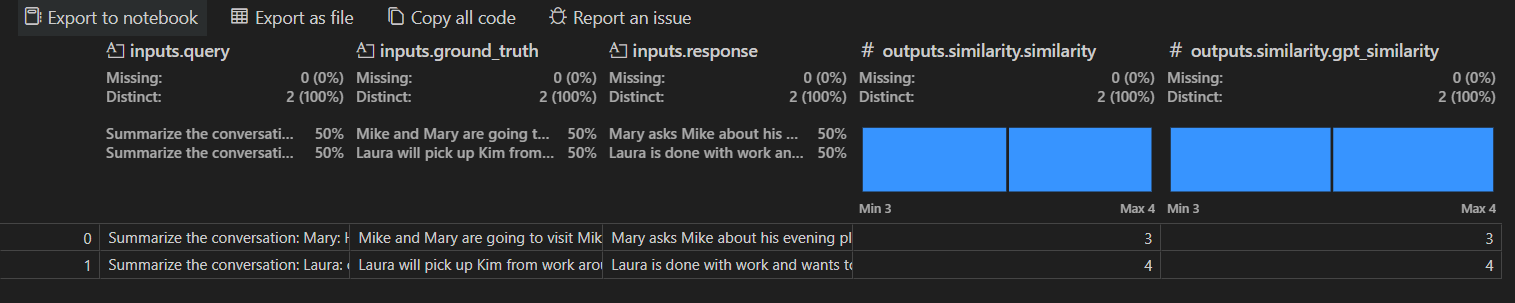

Find results of evaluation

The evaluation job details view shows a table of results for each selected evaluator. Some results may also include aggregate values.
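This page does not say how aggregate values are computed. As a hedged illustration, the following Python sketch averages per-row scores for each evaluator. The mean is only one plausible aggregation, and the evaluator names and numbers are hypothetical placeholders, not AI Toolkit output.

```python
from statistics import mean

# Hypothetical per-row scores, one list per evaluator (placeholder values).
per_row_scores = {
    "f1_score": [1.0, 0.4, 0.0],
    "judge_score": [5, 4, 3],
}


def aggregate(scores: dict[str, list[float]]) -> dict[str, float]:
    """Collapse each evaluator's per-row scores into a single mean value.

    Evaluators with no scores are skipped, since the mean of an empty
    list is undefined.
    """
    return {name: mean(values) for name, values in scores.items() if values}


print(aggregate(per_row_scores))
```

A mean hides the spread across rows, so it is worth scanning the per-row table as well, for example in Data Wrangler.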

You can also select Open In Data Wrangler to open the data with the Data Wrangler extension.